I Built a Tweet Generator That Actually Works

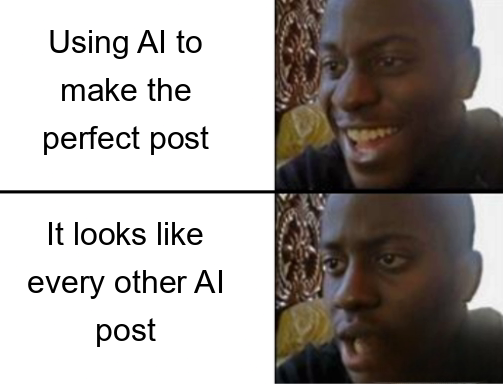

Most AI tweet generators have the same problem: they produce content that screams "I used ChatGPT." You know the type—generic "Plot twist:" threads and "Most people don't realize..." openers that make everyone sound identical.

I took a different approach: instead of just prompting an LLM with a topic, I built a system that learns from two sources simultaneously.

The Two-Rulebook System

Personal Style Rulebook (PSR): Analyzes the user's existing posts to extract their voice—tone, pacing, word choices, quirks. Not templates, but the feel of how they write.

Quality Post Rulebook (QPR): Studies viral tweets from creators they follow. Extracts principles rather than copying structures: what makes hooks work, how proof builds credibility, why certain angles resonate.
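The post doesn't spell out how the PSR is extracted. As one hypothetical starting point, a few cheap numeric signals computed from a user's posts could be passed to the model alongside raw examples. The function name and the chosen signals below are illustrative assumptions, not SupaBird's actual implementation.

```javascript
// Hypothetical helper: computes a few simple voice signals from a user's posts.
// These numbers could accompany raw examples when asking an LLM to draft a PSR.
function extractStyleSignals(posts) {
  const texts = posts.map((p) => p.trim()).filter((t) => t.length > 0);
  if (texts.length === 0) {
    return { avgLength: 0, questionRate: 0, lineBreakRate: 0, lowercaseStartRate: 0 };
  }
  // Fraction of posts matching a predicate (0 to 1).
  const rate = (predicate) => texts.filter(predicate).length / texts.length;
  // Count code points, so most emoji count as one character.
  const totalLength = texts.reduce((sum, t) => sum + [...t].length, 0);
  return {
    avgLength: Math.round(totalLength / texts.length),
    questionRate: rate((t) => t.includes("?")),
    lineBreakRate: rate((t) => t.includes("\n")),
    lowercaseStartRate: rate((t) => /^[a-z]/.test(t)),
  };
}
```

Numbers like these capture pacing and quirks, not templates, which keeps the PSR about feel rather than structure.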

When generating, both rulebooks get passed to the AI with instructions to balance them. Users control the weight of each side.
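A minimal sketch of how the two rulebooks and the user's weighting might be combined into a single prompt. The function name, the `styleWeight` slider (0 to 1), and the prompt wording are all assumptions for illustration.

```javascript
// Hypothetical prompt builder. Rulebooks are plain strings; styleWeight is the
// user-controlled slider, where 1 means "all personal style".
function buildGenerationPrompt({ personalRules, qualityRules, styleWeight, topic }) {
  const w = Math.min(1, Math.max(0, styleWeight)); // clamp to [0, 1]
  const stylePct = Math.round(w * 100);
  const qualityPct = 100 - stylePct;
  return [
    `Write a post about: ${topic}`,
    "",
    `PERSONAL STYLE RULEBOOK (weight ${stylePct}%):`,
    personalRules,
    "",
    `QUALITY POST RULEBOOK (weight ${qualityPct}%):`,
    qualityRules,
    "",
    "When the rulebooks conflict, favor the one with the higher weight.",
    "Borrow principles from the quality rulebook, never its exact phrasing.",
  ].join("\n");
}
```

Expressing the weights as explicit percentages gives the model a concrete tie-breaker instead of a vague "balance these".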

The Content Quality Problem

The trickiest part wasn't generation. It was filtering. Most APIs return everything: promotional posts, "GM" tweets, event announcements. I built a scoring system that evaluates text substance and content value and penalizes low-quality patterns:

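```javascript
// Scores a tweet's substance; higher is better, with a floor of 0.
const calculateContentQuality = (tweet) => {
  let score = 0;
  const text = (tweet.text || '').trim();
  const textLength = text.length;

  // Reward substantive text, penalize near-empty posts
  if (textLength >= 100) score += 30;
  else if (textLength < 10) score -= 20;

  // Penalize low-value patterns
  const lowValuePatterns = [
    /^(gm|good morning)[\s!]*$/i, // bare "GM" posts
    /live\s*(now|soon)/i,         // stream announcements
    /join\s*(me|us)/i             // event invites
  ];

  for (const pattern of lowValuePatterns) {
    if (pattern.test(text)) {
      score -= 30;
      break; // apply the penalty once, however many patterns match
    }
  }

  return Math.max(0, score);
};
```

Although it is just one of a hundred filters, this one alone improved output quality dramatically.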

Implementation Details That Mattered

Character limits: X's limit varies by subscription tier (280 characters on the free tier, more on paid tiers). The system checks the user's subscription status and adjusts both the prompt and the validation accordingly.
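A sketch of tier-aware validation, assuming the tier is already known from the subscription check. `CHAR_LIMITS`, `validateDraft`, `limitInstruction`, and the paid-tier value are hypothetical; only the 280-character free limit comes from above.

```javascript
// Hypothetical tier limits. The paid value is a placeholder assumption;
// check X's current documentation before relying on it.
const CHAR_LIMITS = { free: 280, premium: 25000 };

// Counts Unicode code points rather than UTF-16 units, so single-code-point
// emoji count once. X's own counting weights URLs and some scripts
// differently, so treat this as an approximation.
function charCount(text) {
  return [...text].length;
}

// Unknown tiers fall back to the strictest (free) limit.
function validateDraft(text, tier) {
  const limit = CHAR_LIMITS[tier] ?? CHAR_LIMITS.free;
  const length = charCount(text);
  return { ok: length > 0 && length <= limit, length, limit };
}

// The same limit feeds the prompt, so generation and validation agree.
function limitInstruction(tier) {
  const limit = CHAR_LIMITS[tier] ?? CHAR_LIMITS.free;
  return `Keep each post under ${limit} characters.`;
}
```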

Conversation history: I initially tried summarizing past feedback, but that lost too much nuance. Passing the full conversation history into Claude's context window worked better.
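A sketch of passing full history instead of a summary, assuming chat turns are stored as `{ role, text }` objects. It builds a list in the `{ role, content }` message shape that Anthropic's Messages API accepts; the helper name is hypothetical and the API call itself is omitted.

```javascript
// Hypothetical stored turn shape: { role: "user" | "assistant", text: string }.
// Returns every turn plus the new request, so no feedback is summarized away.
function buildMessages(history, newRequest) {
  const turns = [...history, { role: "user", text: newRequest }];
  const messages = [];
  for (const turn of turns) {
    const last = messages[messages.length - 1];
    if (last && last.role === turn.role) {
      // Merge back-to-back turns from the same role so roles alternate.
      last.content += "\n\n" + turn.text;
    } else {
      messages.push({ role: turn.role, content: turn.text });
    }
  }
  return messages;
}
```

The trade-off is token cost: full history grows with every turn, which is part of why token usage shows up again below.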

Format consistency: Getting consistent, parseable output took iteration. The prompt explicitly shows the required format and what NOT to do. Even then, the parser has to handle malformed responses defensively.
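A defensive parser can try progressively looser strategies before giving up. This sketch assumes the prompt asks for a JSON array of strings, which the post does not specify; `parseDrafts` is a hypothetical name.

```javascript
// Hypothetical expected format: a JSON array of draft strings.
function parseDrafts(raw) {
  const text = String(raw ?? "").trim();

  // 1. If the model wrapped its answer in a code fence (three backticks,
  //    optionally tagged json), keep only the fenced content.
  const fenced = text.match(/`{3}(?:json)?\s*([\s\S]*?)`{3}/);
  const candidate = fenced ? fenced[1].trim() : text;

  // 2. Try the outermost [...] span as JSON, ignoring surrounding prose.
  const start = candidate.indexOf("[");
  const end = candidate.lastIndexOf("]");
  if (start !== -1 && end > start) {
    try {
      const parsed = JSON.parse(candidate.slice(start, end + 1));
      if (Array.isArray(parsed)) {
        const drafts = parsed.filter((d) => typeof d === "string" && d.trim());
        if (drafts.length > 0) return drafts.map((d) => d.trim());
      }
    } catch {
      // Malformed JSON: fall through to the line-based fallback.
    }
  }

  // 3. Fallback: numbered lines such as "1. ..." or "2) ...".
  return candidate
    .split("\n")
    .map((line) => line.match(/^\s*\d+[.)]\s+(.*\S)\s*$/))
    .filter(Boolean)
    .map((m) => m[1]);
}
```

Returning an empty array instead of throwing lets the caller decide whether to retry the generation.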

Storage strategy: Each draft creates an actual Tweet document with status "draft." Users manage them through existing flows—edit, schedule, track. The chat stores references, not duplicates.
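A minimal in-memory sketch of reference-based storage. `TweetStore`, `saveDraftsFromChat`, and `draftsForChat` are hypothetical stand-ins for whatever database layer is actually used; only the "draft" status and the store-references-not-copies idea come from the post.

```javascript
// Hypothetical stand-in for the Tweet collection.
class TweetStore {
  constructor() {
    this.tweets = new Map();
    this.nextId = 1;
  }
  create(fields) {
    const id = `tweet_${this.nextId++}`;
    this.tweets.set(id, { id, ...fields });
    return id;
  }
  get(id) {
    return this.tweets.get(id);
  }
}

// Each draft becomes a real Tweet document; the chat keeps only its ID.
function saveDraftsFromChat(store, chat, drafts, userId) {
  for (const text of drafts) {
    const id = store.create({ userId, text, status: "draft", createdAt: new Date() });
    chat.draftIds.push(id); // a reference, not a copy of the text
  }
  return chat;
}

// Resolving references at read time means edits made through the normal
// flows (editing, scheduling) show up in the chat automatically.
function draftsForChat(store, chat) {
  return chat.draftIds.map((id) => store.get(id)).filter(Boolean);
}
```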

What Didn't Work at First

Content selection: Grabbing the 40 most recent posts meant a single bad posting week could ruin the rulebook. The system now prioritizes viral content, but smarter engagement-based selection is still needed.
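One possible shape for engagement-based selection is to rank by interactions per impression rather than by recency. The post shape and the weights (reposts and replies counting more than likes) are assumptions to tune, not measured values, and the names are hypothetical.

```javascript
// Hypothetical post shape: { text, likes, reposts, replies, impressions }.
function engagementRate(post) {
  // Illustrative weights: deeper interactions count more than likes.
  const interactions = post.likes + 2 * post.reposts + 3 * post.replies;
  // Guard against missing or zero impressions.
  return post.impressions > 0 ? interactions / post.impressions : 0;
}

// Picks the best-performing posts instead of the most recent ones,
// without mutating the caller's array.
function selectTrainingPosts(posts, limit = 40) {
  return [...posts]
    .sort((a, b) => engagementRate(b) - engagementRate(a))
    .slice(0, limit);
}
```

Normalizing by impressions keeps one post that happened to reach a huge audience from crowding out consistently strong ones.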

Token usage: Passing full rulebooks every time is expensive. Analysis showed intelligent truncation could reduce usage by 40-50%. Haven't implemented caching yet.
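A sketch of budget-based truncation, assuming each rule carries a priority score. The 4-characters-per-token estimate is a common rough heuristic for English text, not a real tokenizer, and the function names are hypothetical.

```javascript
// Rough heuristic only; use the provider's token counting for exact numbers.
const estimateTokens = (text) => Math.ceil(text.length / 4);

// Hypothetical rule shape: { text, priority }, higher priority = more important.
// Greedily keeps the highest-priority rules that fit the budget, then restores
// the original order so the rulebook still reads naturally.
function truncateRulebook(rules, maxTokens) {
  const ranked = rules
    .map((rule, index) => ({ ...rule, index }))
    .sort((a, b) => b.priority - a.priority);
  const kept = [];
  let used = 0;
  for (const rule of ranked) {
    const cost = estimateTokens(rule.text);
    if (used + cost <= maxTokens) {
      kept.push(rule);
      used += cost;
    }
  }
  return kept
    .sort((a, b) => a.index - b.index)
    .map(({ text, priority }) => ({ text, priority }));
}
```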

Draft diversity: Sometimes outputs were too similar. Temperature helps, but multiple passes or explicitly requesting different angles would work better.
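Alongside asking for different angles, a cheap post-generation check can drop near-duplicates. This sketch uses Jaccard similarity on word sets; the threshold and the names `jaccard` and `pickDiverseDrafts` are illustrative assumptions.

```javascript
// Jaccard similarity over lowercase word sets:
// 1 means identical vocabulary, 0 means no shared words.
function jaccard(a, b) {
  const words = (s) => new Set(s.toLowerCase().match(/[a-z0-9']+/g) ?? []);
  const setA = words(a);
  const setB = words(b);
  if (setA.size === 0 && setB.size === 0) return 1;
  let shared = 0;
  for (const w of setA) if (setB.has(w)) shared++;
  return shared / (setA.size + setB.size - shared);
}

// Keeps a draft only if it is not too similar to any draft already kept.
// The 0.6 default is a guess to tune against real output.
function pickDiverseDrafts(drafts, threshold = 0.6) {
  const kept = [];
  for (const draft of drafts) {
    if (kept.every((k) => jaccard(k, draft) < threshold)) kept.push(draft);
  }
  return kept;
}
```

Word overlap only catches surface repetition; two drafts with different wording but the same angle will still pass, which is why prompting for distinct angles remains the stronger lever.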

What I Learned

Good AI-assisted writing isn't about perfect prompts. It's about understanding constraints (character limits, token costs, format consistency) and building defensively around them.

The two-rulebook concept is sound, but execution matters more. Small details—how you parse responses, when you cache data, how you handle failures—determine whether the system feels reliable or flaky.

The interesting paradox: By systematically analyzing what makes content engaging, the tool helps people be more authentically themselves. It's not about copying—it's about understanding why communication patterns work and adapting them to your unique perspective.

Users aren't becoming clones. They're becoming better versions of their own voice.

What's Next?

I'm exploring how these patterns apply beyond Twitter—LinkedIn posts, newsletter writing, even technical documentation. Currently expanding SupaBird to handle these different content types while maintaining the same principle: effective communication follows learnable patterns, but authenticity can't be automated.

If you're interested in the technical details or want to discuss the ethics of AI-assisted content creation, I'd love to hear your thoughts.